I have moved my blog. I’m now blogging at http://www.yvespeirsman.be.

A New Framework for Semantic Models

It’s been an exciting few years in Natural Language Processing. One of the most important evolutions is the emergence of Deep Learning as an extremely successful technique in models of natural language. Deep learning goes back to the early days of research in neural networks, but has recently gained more attention thanks to some drastic improvements in training procedures. In the wake of its academic success, Google is fully embracing Deep Learning, and Textkernel, too, the NLP company where I used to work, has integrated Deep Learning in its CV parsing software.

One of the areas where Deep Learning still had to prove its worth was computational semantics — the area of my own PhD research. Indeed, I remember experimenting with some early Deep Learning implementations at Stanford back in 2010, but without the success I expected. Four years later, things are looking quite different. At ACL, the yearly conference organized by the Association of Computational Linguistics, last month, Baroni, Dinu and Kruszewski presented a paper that compared Deep Learning models of word meaning with their classic count-based models.

Baroni et al. present a very complete picture, with a variety of experiments on all kinds of linguistic tasks, from the typical synonym test to word-based analogical reasoning. They were expecting quite mixed results, with different models scoring well on the different tasks. Their results took them by surprise. The Deep Learning models of word meaning outperformed the classic count-based models on nearly all tasks, and often by a considerable margin. This was particularly baffling since they simply downloaded Google’s word2vec toolkit and used some of the standard parameter settings suggested by the developers. Who knows what will happen when more specific approaches are applied to the tasks?

It’s clear now more than ever that Deep Learning is here to stay. I’ve already downloaded word2vec myself, and I’m looking forward to doing some experiments to familiarize myself with the software and models. Stay tuned for some early results!

When the Government Hinders Innovation

Last week at work we held a brainstorm about leveraging Open and Big Data for new products. There was agreement between all participants that the legal domain is due for some long-awaited innovation, and that new data technologies can help us develop some great new products. We have the technologies and we have the ideas. The only problem is that in Belgium, we just don’t have the data.

MOOC Search, a Careerhack web app

About a month ago I learnt about the Careerhack app challenge, a contest organized by the UK Commission for Employment and Skills. Because I’m working on my app development skills, and my previous job at Textkernel sparked my interest in technology for the job market, I decided to hack something together.

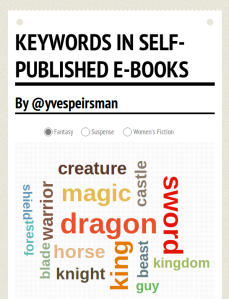

Keywords in Self-Published E-Books

A few weeks ago I downloaded almost 500 books from Smashwords, a website where independent authors can self-publish their e-books. All books are labelled with categories, like Fantasy, Suspense and Women’s Fiction. It’s a perfect setup for a comparison of these different genres.

One quick way to do this is to compute the keywords for the genres. Keywords are words that appear more often than we would expect on the basis of their general frequency. So, I (or rather, my computer) counted all the words in all 500 books, and compared their frequencies in the three genres above with that in other genres. I then selected the most typical keywords on the basis of their log-likelihood ratio.

Definition extraction from legislation

Publishers used to sell just texts. With the arrival of the internet, they put some of these texts online. That worked for a while. But in these days of the Google Knowledge Graph, Wolfram Alpha and IBM Watson, selling texts is not sufficient anymore, whatever the channel. What publishers need to sell is knowledge.

Publishers used to sell just texts. With the arrival of the internet, they put some of these texts online. That worked for a while. But in these days of the Google Knowledge Graph, Wolfram Alpha and IBM Watson, selling texts is not sufficient anymore, whatever the channel. What publishers need to sell is knowledge.

Modeling book endings (Part 1)

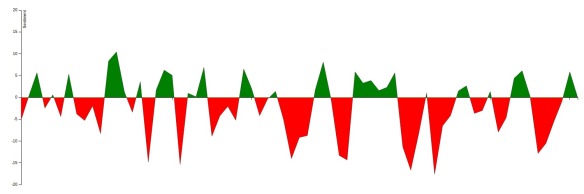

I love reading. I’m fascinated by machine learning. And I’m convinced that bringing the two together, can open up new ways of exploring and enjoying literature. This weekend I wrote a simple program that analyzes the sentiment in books. By looking at its evolution through the pages, I wanted to figure out if it is possible to tell the difference between happy and unhappy endings.

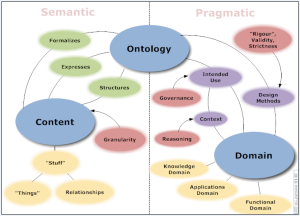

Ontology Learning from Italian Legal Texts

Ontologies capture our knowledge of a specific domain. Because this knowledge is often encoded in unstructured natural language text, it is essential we develop reliable techniques that automatically extract ontologies from such sources. One logical area of application is the legal domain, where valuable knowledge is dispersed across thousands of documents.

Ontologies capture our knowledge of a specific domain. Because this knowledge is often encoded in unstructured natural language text, it is essential we develop reliable techniques that automatically extract ontologies from such sources. One logical area of application is the legal domain, where valuable knowledge is dispersed across thousands of documents.

Automatic Detection of Arguments in Legal Texts

Legal professionals search many long texts for arguments to substantiate their claims. Natural Language Processing can help them in their search for information. In Automatic Detection of Arguments in Legal Texts, Moens, Boiy, Palau and Reed show how a classifier can be trained to automatically identify arguments in a variety of text sources.

ReadTweet, a book discovery app

Two days ago, voting started for the BookSmash Challenge, a competition for innovative app ideas in the world of books. The challenge motivated me to realize an idea that I’ve been toying around with for some time now: a book discovery app supported by language technology. ReadTweet takes someone’s Twitter feed, identifies the topics that person tweets about, and then makes book recommendations based on those topics.